<summary>How to use multiple ChatGPT-compatible interfaces (or dedicated interfaces) simultaneously?</summary>

If you simply have several keys and want to rotate through them in turn, separate them with `|`.<br>
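
To illustrate what a `|`-separated key field amounts to, the sketch below splits it and hands out keys one after another. This is a hypothetical model: the helper name `key_rotator` and the assumption that keys are used in strict round-robin order are illustrative, not a description of how the application actually picks keys.

```python
from itertools import cycle
from typing import Iterator


def key_rotator(key_field: str) -> Iterator[str]:
    """Split a '|'-separated key field and yield keys in round-robin order.

    Hypothetical helper: strict round-robin is an assumed polling strategy.
    """
    # Trim whitespace around each key and drop empty entries (e.g. a trailing '|').
    keys = [k.strip() for k in key_field.split("|") if k.strip()]
    if not keys:
        raise ValueError("no API keys found")
    # itertools.cycle repeats the list endlessly: key1, key2, ..., key1, ...
    return cycle(keys)


rotator = key_rotator("sk-aaa|sk-bbb|sk-ccc")
print([next(rotator) for _ in range(4)])  # ['sk-aaa', 'sk-bbb', 'sk-ccc', 'sk-aaa']
```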

Sometimes, however, you may want to use several different API addresses, prompts, models, or parameters at the same time, for example to compare translation quality. To do so:<br>

A window will pop up. Select the ChatGPT-compatible interface (or dedicated interface) and give the copy a name. This copies the current settings and API of that interface.

Activate the copied interface to configure it independently. The copy runs alongside the original interface, so you can use several different settings at once.

>**model** can be selected from a dropdown list, and some interfaces can fetch the model list dynamically using the **API Interface Address** and **API Key**. After filling in these two fields, click the refresh button next to **model** to load the available models. If the platform does not support model list retrieval and the default list does not include the model you need, enter the model name manually as given in the platform's official API documentation.
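
For an OpenAI-compatible platform, a model list lookup typically means an authenticated `GET` to `{base}/models`, whose response carries the IDs under `data[*].id`. The sketch below shows that request and how the IDs can be pulled out. It assumes the **API Interface Address** is an OpenAI-style base URL ending in `/v1` (e.g. `https://api.openai.com/v1`); the function names are hypothetical, and the application's own refresh logic may differ.

```python
import json
import urllib.request


def build_models_request(api_base: str, api_key: str) -> urllib.request.Request:
    # Assumption: api_base is an OpenAI-style base URL such as "https://api.openai.com/v1".
    url = api_base.rstrip("/") + "/models"
    headers = {}
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(url, headers=headers, method="GET")


def parse_model_ids(body: str) -> list[str]:
    # OpenAI-compatible servers answer with {"object": "list", "data": [{"id": ...}, ...]}.
    return [m["id"] for m in json.loads(body).get("data", [])]


def fetch_model_ids(api_base: str, api_key: str, timeout: float = 10.0) -> list[str]:
    # Performs the actual network call; fails if the platform has no /models endpoint.
    req = build_models_request(api_base, api_key)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return parse_model_ids(resp.read().decode("utf-8"))
```

If `fetch_model_ids` raises an HTTP error such as 404, the platform most likely does not offer the model list endpoint, which is the case where the model name has to be typed in by hand.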

>Most large model platforms use ChatGPT-compatible interfaces.<br>Since there are so many platforms, it's impossible to list them all. For platforms not listed here, refer to their documentation to fill in the corresponding parameters.

**API Key** **The API Key must be left empty; otherwise an error will occur.**

**Model** You can view the currently available models at [https://deepinfra.com/chat](https://deepinfra.com/chat). At the time of writing, the freely available models are:

- `meta-llama/Meta-Llama-3.1-405B-Instruct`
- `meta-llama/Meta-Llama-3.1-70B-Instruct`
- `meta-llama/Meta-Llama-3.1-8B-Instruct`
- `mistralai/Mixtral-8x22B-Instruct-v0.1`
- `mistralai/Mixtral-8x7B-Instruct-v0.1`
- `microsoft/WizardLM-2-8x22B`
- `microsoft/WizardLM-2-7B`
- `Qwen/Qwen2.5-72B-Instruct`
- `Qwen/Qwen2-72B-Instruct`
- `Qwen/Qwen2-7B-Instruct`
- `microsoft/Phi-3-medium-4k-instruct`
- `google/gemma-2-27b-it`
- `openbmb/MiniCPM-Llama3-V-2_5`
- `mistralai/Mistral-7B-Instruct-v0.3`
- `lizpreciatior/lzlv_70b_fp16_hf`
- `openchat/openchat_3.5`
- `openchat/openchat-3.6-8b`
- `Phind/Phind-CodeLlama-34B-v2`
- `Gryphe/MythoMax-L2-13b`
- `cognitivecomputations/dolphin-2.9.1-llama-3-70b`

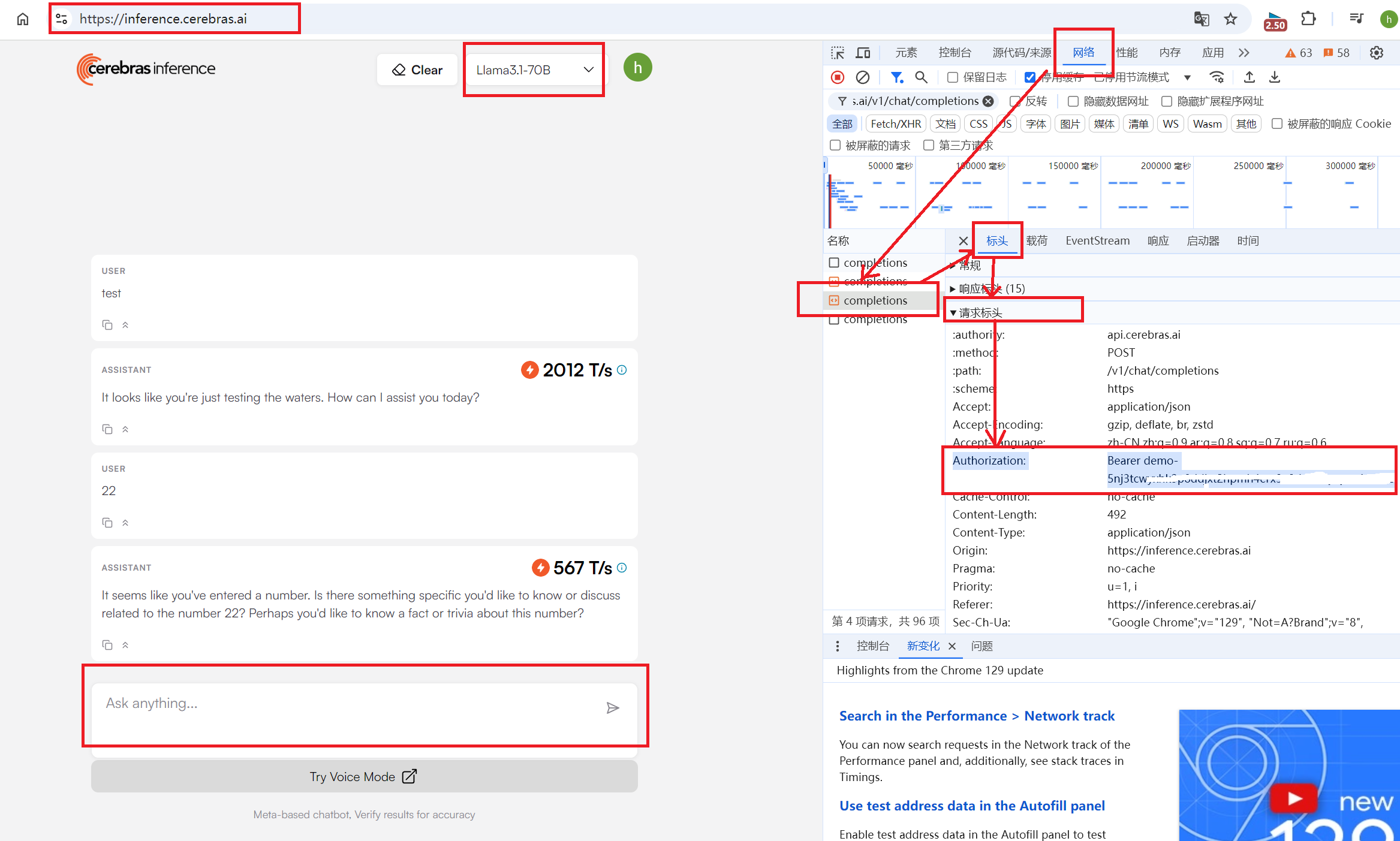
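
One plausible reading of the "leave the API Key empty" rule is that the request is then sent with no `Authorization` header at all. The sketch below builds such a chat request under that assumption. The endpoint URL is assumed to be deepinfra's OpenAI-compatible chat endpoint and `build_chat_request` is a hypothetical helper; neither is confirmed as what the interface actually sends.

```python
import json
import urllib.request

# Assumed endpoint: check deepinfra's docs for the address the interface actually uses.
DEEPINFRA_CHAT_URL = "https://api.deepinfra.com/v1/openai/chat/completions"


def build_chat_request(url: str, model: str, text: str, api_key: str = "") -> urllib.request.Request:
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": text}],
    }
    headers = {"Content-Type": "application/json"}
    # Assumption: an empty API key means the Authorization header is omitted entirely.
    if api_key:
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_chat_request(DEEPINFRA_CHAT_URL, "meta-llama/Meta-Llama-3.1-8B-Instruct", "Hello")
print(req.get_header("Authorization"))  # None
```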

**API Key** Select a model on [https://inference.cerebras.ai](https://inference.cerebras.ai/) and send a message, then inspect the request (for example, in the browser's developer tools) and check `Headers` -> `Request Headers` -> `Authorization`. Its value has the form `Bearer demo-xxxxhahaha`, where `demo-xxxxhahaha` is the API Key.
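
Extracting the key from the intercepted header is just a matter of dropping the `Bearer ` scheme prefix. A minimal sketch, using a hypothetical helper name and the placeholder value from the text above:

```python
def key_from_authorization(header_value: str) -> str:
    """Return the token part of an 'Authorization: Bearer <token>' header value."""
    # partition splits at the first space: ("Bearer", " ", "<token>")
    scheme, _, token = header_value.strip().partition(" ")
    if scheme.lower() != "bearer" or not token.strip():
        raise ValueError("expected a value of the form 'Bearer <token>'")
    return token.strip()


print(key_from_authorization("Bearer demo-xxxxhahaha"))  # demo-xxxxhahaha
```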

**API Key** Obtain it from [Create API Key](https://console.volcengine.com/ark/region:ark+cn-beijing/apiKey?apikey=%7B%7D).

**model** Create an inference access point via [Create Inference Access Point](https://console.volcengine.com/ark/region:ark+cn-beijing/endpoint?current=1&pageSize=10), then enter the **Access Point** in the **model** field instead of a model name.
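
In an OpenAI-style request body, this means the access point ID simply occupies the `model` field. The sketch below shows the resulting body; `ark_chat_payload` is a hypothetical helper and `ep-20240101000000-abcde` is a made-up placeholder, not a real access point.

```python
import json


def ark_chat_payload(endpoint_id: str, text: str) -> dict:
    """Build an OpenAI-style chat body for Volcengine Ark (sketch).

    The inference access point ID goes where a model name would normally go.
    """
    return {
        "model": endpoint_id,
        "messages": [{"role": "user", "content": text}],
    }


# Hypothetical access point ID, for illustration only.
body = ark_chat_payload("ep-20240101000000-abcde", "Hello")
print(json.dumps(body, ensure_ascii=False))
```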

**API Key** Obtain the **APIKey** and **APISecret** as described in the [official documentation](https://www.xfyun.cn/doc/spark/HTTP%E8%B0%83%E7%94%A8%E6%96%87%E6%A1%A3.html#_3-%E8%AF%B7%E6%B1%82%E8%AF%B7%E6%B1%82%E6%B1%82%E8%AF%B7%E6%B1%82%E6%B1%82%E8%AF%B7%E6%B1%82), then enter them in the format **APIKey:APISecret**.
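
A quick way to sanity-check the combined field before saving it is to split it at the first colon. The sketch below does that; `split_key_secret` is a hypothetical helper, and splitting at the first colon assumes the APIKey itself contains no colon.

```python
def split_key_secret(field: str) -> tuple[str, str]:
    """Split an 'APIKey:APISecret' field into its two parts (sketch).

    Assumption: the APIKey contains no ':', so the first colon is the separator.
    """
    key, sep, secret = field.strip().partition(":")
    if not sep or not key or not secret:
        raise ValueError("expected the format APIKey:APISecret")
    return key, secret


print(split_key_secret("myKey:mySecret"))  # ('myKey', 'mySecret')
```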

!> For the **API Key**, use the Access Key and Secret Key from Baidu AI Cloud IAM to generate a Bearer Token and enter that token as the **API Key**, or enter both keys directly in the **API Key** field in the format `{Access Key}:{Secret Key}`. Note that these differ from the API Key and Secret Key of the old v1 version of Qianfan ModelBuilder; the two are not interchangeable.